Real-time Reinforcement Learning

Consider a futuristic scenario in which a team of chef robots is working together to prepare omelets. While we would like these robots to use the most powerful and reliable models possible, it is also imperative that they keep pace with a changing world. Ingredients need to be added at the right time, and the omelet must be monitored so that everything cooks evenly. If the robots don’t act quickly enough, the omelet will surely burn. They must also cope with uncertainty about their partners’ actions and adapt accordingly.

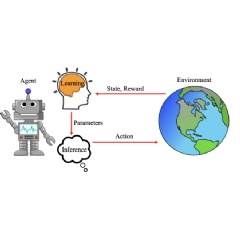

Real-time Reinforcement Learning

In reinforcement learning (RL), it is typically assumed that the agent interacts with the environment in an idealized turn-based game. The environment is assumed to "pause" as the agent computes its actions and learns from experiences. Likewise, the agent is assumed to "pause" as the environment transitions to its next state.

The diagram below highlights two key difficulties that arise in real-time environments but are absent from the standard turn-based conception of RL. First, the agent may not act at every environment step because action inference takes too long. This leads to a new source of sub-optimality that we call inaction regret. Second, actions are computed from outdated states, so their impact on the environment is delayed. This leads to another new source of sub-optimality, particularly prominent in stochastic environments, that we call delay regret. This blog post summarizes two complementary ICLR 2025 papers from our lab at Mila: the first presents a solution for minimizing inaction regret, and the second presents a solution for minimizing delay regret.
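To make the two regrets concrete, here is a toy timeline. It is a minimal sketch under our own simplifying assumptions (discrete environment steps, a fixed inference time measured in whole steps, a single inference process, and a default action on steps where nothing new arrives); `StepRecord` and `sequential_agent_timeline` are hypothetical names, not code from the papers. Steps without a fresh action correspond to inaction, and the gap between an action's step and the step of the observation it was computed from is the delay.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StepRecord:
    step: int                # environment step index
    acted: bool              # did a freshly computed action arrive at this step?
    obs_step: Optional[int]  # step whose observation produced that action


def sequential_agent_timeline(num_steps: int, inference_steps: int) -> List[StepRecord]:
    """Toy timeline for one agent whose inference takes `inference_steps`
    environment steps while the environment keeps running.

    Steps with acted=False are inaction (assumed to fall back to a default
    action); for steps with acted=True, step - obs_step is the delay.
    """
    if inference_steps < 1:
        raise ValueError("inference must take at least one step in this toy model")
    timeline: List[StepRecord] = []
    pending_obs: Optional[int] = None  # observation the current inference started from
    ready_at = 0                       # step at which the current inference finishes
    for t in range(num_steps):
        acted, obs_step = False, None
        if pending_obs is not None and t == ready_at:
            # inference finished: apply the action computed from an old observation
            acted, obs_step = True, pending_obs
            pending_obs = None
        if pending_obs is None:
            # agent is idle: start inference on the observation from step t
            pending_obs, ready_at = t, t + inference_steps
        timeline.append(StepRecord(t, acted, obs_step))
    return timeline


timeline = sequential_agent_timeline(num_steps=9, inference_steps=3)
print([r.step for r in timeline if r.acted])               # [3, 6]
print([r.step - r.obs_step for r in timeline if r.acted])  # [3, 3]
```

With a three-step inference time, the agent acts at only two of nine steps, and each of those actions is based on an observation that is already three steps old.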

Minimizing Inaction: Staggered Inference

In the first paper, our starting point is that, within the standard turn-based RL interaction paradigm, inaction grows with the model's parameter count, since a larger model takes longer to compute each action. To enable foundation-model-scale RL in the real world, the RL community must therefore consider new deployment frameworks. To this end, we propose a framework for asynchronous multi-process inference and learning.

In this framework, the agent makes maximal use of its available compute by performing inference and learning asynchronously. Specifically, our paper proposes two algorithms for staggering inference processes. The basic idea is to adaptively offset parallel inference processes so that, together, they act in the environment at regular, shorter intervals. We show that, given sufficient compute, either algorithm makes it possible to deploy an arbitrarily large model with an arbitrarily long inference time such that it acts at every environment step, eliminating inaction regret.
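The paper's two algorithms set the offsets adaptively; the sketch below instead uses a fixed, evenly spaced schedule, which is our own simplification, to show why enough staggered processes let a slow model act at every step. The function names and the integer timing model are hypothetical.

```python
from typing import List


def processes_needed(inference_ms: int, step_ms: int) -> int:
    """Smallest number of staggered inference processes such that some process
    finishes an action every environment step (integer ceiling division)."""
    return -(-inference_ms // step_ms)


def staggered_action_steps(num_steps: int, inference_steps: int, num_procs: int) -> List[int]:
    """Environment steps at which at least one process delivers an action.

    Fixed, evenly spaced schedule (a simplification of adaptive staggering):
    process k first starts at step k * inference_steps // num_procs and
    restarts on the newest observation as soon as each inference finishes.
    """
    offsets = [k * inference_steps // num_procs for k in range(num_procs)]
    acted = set()
    for offset in offsets:
        t = offset + inference_steps  # this process's first action
        while t < num_steps:
            acted.add(t)
            t += inference_steps
    return sorted(acted)


print(processes_needed(inference_ms=100, step_ms=25))              # 4
print(staggered_action_steps(12, inference_steps=4, num_procs=2))  # [4, 6, 8, 10]
print(staggered_action_steps(12, inference_steps=4, num_procs=4))  # [4, 5, 6, 7, 8, 9, 10, 11]
```

With four processes, the model acts at every step once the first inference completes. Each action is still computed from an observation `inference_steps` steps old, however, so staggering removes inaction while leaving delay untouched.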

Real-time Experiments

We put our new framework to the test on real-time simulations of the Game Boy and Atari that are synced to the frame rate and interaction protocols that humans experience when they actually play these games on their host consoles. Below we highlight the superior performance of asynchronous inference and learning in successfully catching Pokémon in Pokémon Blue when using a model with 100M parameters. Note that the agent must not only act quickly, but also constantly adapt to novel scenarios to make progress.

In our paper, we also evaluate our framework on real-time games that prioritize reaction time, such as Tetris. With asynchronous inference and learning, performance degrades much more slowly as model size increases. It still degrades for large models, however, because delay regret remains unaddressed.

Minimizing Inaction and Delay with a Single Neural Network

Our second paper presents an architectural solution for minimizing inaction and delay when deploying a neural network in real-time environments where staggering inference is not an option. Sequential layer-by-layer computation exposes an inefficiency of deep networks: each layer takes approximately the same amount of time to execute, so total latency grows in proportion to network depth, leading to slow responses.

This limitation resembles that of early CPU architectures, which processed instructions one after another, leaving resources underutilized and increasing execution time. Modern CPUs address this with pipelining, a technique that executes different stages of multiple instructions in parallel. Inspired by this principle, we introduce parallel computation of layers in neural networks. By computing all layers at once, each working on a different observation, the network produces an output at every layer step, which reduces inaction regret.
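A minimal sketch of layer pipelining, under our own assumptions: every layer takes exactly one tick (δ), the layers are toy scalar functions, and `forward` and `pipelined_run` are hypothetical names rather than the paper's code. It checks that the pipelined network computes the same function as the sequential one, only staggered in time.

```python
from typing import Callable, List, Optional, Sequence

Layer = Callable[[float], float]


def forward(layers: Sequence[Layer], x: float) -> float:
    """Ordinary sequential forward pass: one action per N layer times."""
    for layer in layers:
        x = layer(x)
    return x


def pipelined_run(layers: Sequence[Layer], observations: Sequence[float]) -> List[Optional[float]]:
    """Compute all layers simultaneously, one tick (one layer time) per observation.

    Like a CPU pipeline, each layer works on a different observation at once:
    layer 0 reads the newest observation, and layer i reads the value layer i-1
    produced on the previous tick. Returns the output at each tick (None while
    the pipeline is still filling).
    """
    held: List[Optional[float]] = [None] * len(layers)  # each layer's output from the last tick
    outputs: List[Optional[float]] = []
    for obs in observations:
        inputs = [obs] + held[:-1]  # shift: layer i consumes layer i-1's previous result
        held = [None if x is None else layer(x) for layer, x in zip(layers, inputs)]
        outputs.append(held[-1])
    return outputs


layers = [lambda x: x + 1, lambda x: 2 * x, lambda x: x - 3]  # toy 3-layer "network"
observations = [0.0, 1.0, 2.0, 3.0, 4.0]
print(pipelined_run(layers, observations))             # [None, None, -1.0, 1.0, 3.0]
print([forward(layers, o) for o in observations[:3]])  # [-1.0, 1.0, 3.0]
```

Once the pipeline is full, an output arrives every tick rather than every N ticks. The output at tick t, however, is the ordinary forward pass of the observation from tick t - N + 1 (N = 3 here), so the observation-to-action latency is still Nδ.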

To further reduce delay, we introduce temporal skip connections that allow new observations to reach deeper layers more quickly, without having to pass through each preceding layer.
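The sketch below tracks only the index of the newest observation that can influence the output, with and without temporal skip connections. The wiring is an assumption for illustration: every layer above the first also receives the current observation directly, which may differ from the paper's exact connectivity, and `output_freshness` is a hypothetical name.

```python
from typing import List, Optional


def output_freshness(num_layers: int, num_ticks: int, temporal_skip: bool) -> List[Optional[int]]:
    """Index of the newest observation able to influence the network output at
    each tick, for a layer-pipelined network where one tick = one layer time.

    Observation t arrives at tick t. Layer 0 reads it; layer i > 0 reads layer
    i-1's activation from the previous tick. With temporal_skip=True we assume
    every layer i > 0 additionally receives observation t directly
    (hypothetical wiring).
    """
    newest: List[Optional[int]] = [None] * num_layers  # per-layer freshness after last tick
    outputs: List[Optional[int]] = []
    for t in range(num_ticks):
        updated: List[Optional[int]] = [t]  # layer 0 always sees the current observation
        for i in range(1, num_layers):
            sources = [newest[i - 1]]       # pipelined input from the layer below
            if temporal_skip:
                sources.append(t)           # skip edge from the current observation
            known = [s for s in sources if s is not None]
            updated.append(max(known) if known else None)
        newest = updated
        outputs.append(newest[-1])
    return outputs


print(output_freshness(3, 6, temporal_skip=False))  # [None, None, 0, 1, 2, 3]
print(output_freshness(3, 6, temporal_skip=True))   # [0, 1, 2, 3, 4, 5]
```

Without skips, the output computed during tick t reflects observations no newer than t - N + 1. With skips, observation t already influences the output computed during tick t, so the newest information reaches the action after one layer time, while the deeper path still carries older, more heavily processed features.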

Our key contribution is the combination of parallel computation and temporal skip connections to reduce inaction and delay regrets in real-time systems.

The figure below illustrates this. The y-axis represents layer depth, from the initial observation through the first- and second-layer representations to the action output, while the x-axis encodes time. Each arrow therefore represents one layer’s computation, which takes δ seconds.

In the baseline (left), a new observation must traverse the full depth N sequentially, so the action becomes available after Nδ seconds. Parallel computation of layers (centre) reduces inaction regret by increasing throughput from one inference every Nδ seconds to one every δ seconds. Finally, temporal skip connections (right) reduce the total delay from Nδ to δ by allowing the newest observation to reach the output after a single δ. Conceptually, this addresses delay by trading off network expressiveness against the need to incorporate recent, time-sensitive information as fully as possible.
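The timings of the three panels can be collected into one table. This is our own summary of the description above; with temporal skip connections, δ is the latency of the newest observation along the shortest path, while information that passes through all N layers is still Nδ old.

```latex
\begin{array}{lcc}
\text{setup} & \text{time between actions} & \text{observation-to-action latency} \\
\hline
\text{sequential baseline} & N\delta & N\delta \\
\text{parallel layers} & \delta & N\delta \\
\text{parallel layers + temporal skips} & \delta & \delta
\end{array}
```

For instance, with a hypothetical network of N = 4 layers at δ = 5 ms each, the baseline acts every 20 ms with 20 ms latency; parallel layers act every 5 ms but still with 20 ms latency; adding temporal skips brings the newest observation to the output in 5 ms.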

Additionally, augmenting inputs with past actions/states restores the Markov property, improving learning stability even in the presence of delay. As our results show, this reduces both delay regret and optimization-related regret. The rightmost columns in the figure below correspond to our best-performing setup, which combines parallel computation, temporal skip connections, and state augmentation.
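A common construction for a constant delay of k steps is to append to each observation the k actions already issued but not yet reflected in that observation; together, they form a Markov state. The sketch below does exactly that. The class name, its methods, and the zero padding at the start of an episode are hypothetical choices, and the papers' exact augmentation may differ.

```python
from collections import deque
from typing import Deque, List, Sequence


class ActionHistoryAugmenter:
    """Append the last `k` actions to each observation (hypothetical helper).

    When an action takes effect k steps after the observation it was based on,
    the observation alone is not Markov: what happens next also depends on the
    k actions still "in flight". Feeding those actions to the network restores
    the Markov property for the augmented state.
    """

    def __init__(self, k: int, action_dim: int, fill: float = 0.0):
        self.k = k
        self.action_dim = action_dim
        self.fill = fill
        self.reset()

    def reset(self) -> None:
        # start of an episode: no real actions yet, so pad with `fill`
        self.history: Deque[List[float]] = deque(
            [[self.fill] * self.action_dim for _ in range(self.k)], maxlen=self.k
        )

    def record(self, action: Sequence[float]) -> None:
        """Call once per environment step with the action actually applied."""
        self.history.append(list(action))  # the oldest action drops out automatically

    def augment(self, observation: Sequence[float]) -> List[float]:
        """Flat vector: observation followed by the k most recent actions, oldest first."""
        flat = list(observation)
        for action in self.history:
            flat.extend(action)
        return flat


aug = ActionHistoryAugmenter(k=2, action_dim=1)
for a in ([1.0], [2.0], [3.0]):
    aug.record(a)
print(aug.augment([0.1]))  # [0.1, 2.0, 3.0]
```

Keeping the actions in a bounded `deque` makes the augmented input a fixed size, so it can be fed to the same network at every step.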

Combining Both

Staggered asynchronous inference and temporal skip connections are orthogonal but complementary. While temporal skip connections reduce the delay between observation and action within a model, staggered inference ensures actions are delivered consistently even with large models. Together, they decouple model size from interaction latency, enabling the deployment of expressive, low-latency agents in real-time settings. This has strong implications for high-stakes domains like robotics, autonomous vehicles, and financial trading, where responsiveness is critical. By enabling large models to act at high frequencies without compromising expressiveness, these methods mark a step toward making reinforcement learning practical for real-world, latency-sensitive applications.
